What Is IQ And How Is IQ Determined?

IQ is a familiar concept in modern psychology, yet understanding what it truly represents and how it is assessed can be complex, and interpretations differ. While IQ is commonly associated with intelligence, its exact nature and how it should be measured remain subjects of ongoing discussion among psychologists, educators, and researchers.

At its core, IQ is a numerical representation of an individual's cognitive abilities in relation to others in the same age group. However, the concept of intelligence itself is multifaceted, encompassing various mental abilities such as problem-solving, reasoning, and memory, which IQ tests aim to assess.

What Does IQ Mean?

IQ, or Intelligence Quotient, is an assessment of a person's cognitive abilities compared to the general population. It is derived from standardized tests designed to measure intelligence across several domains. Based on your answers to questions covering reasoning, problem-solving, spatial reasoning, verbal ability, and memory, a numerical score is calculated. This IQ score is then used to place individuals into different intelligence ranges.

The term IQ was introduced in 1912 by psychologist William Stern. It was originally expressed as the ratio of mental age to chronological age (the time elapsed since birth), multiplied by 100. For example, a 20-year-old with a mental age of 20 has an IQ of 100 (the average IQ), while a 30-year-old with a mental age of 45 has an IQ of 150.
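The ratio formula is simple enough to check by hand. As a minimal sketch (the function name `ratio_iq` is made up for illustration), here it is in Python with the two examples from the paragraph above:

```python
def ratio_iq(mental_age: float, chronological_age: float) -> float:
    """Historical ratio IQ: mental age divided by chronological age, times 100."""
    if chronological_age <= 0:
        raise ValueError("chronological_age must be positive")
    return mental_age / chronological_age * 100


print(ratio_iq(20, 20))  # 100.0
print(ratio_iq(45, 30))  # 150.0
```

This only illustrates the historical definition; modern tests score relative to an age group, as described in the next section.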

How Is IQ Determined?

IQ is typically measured through standardized tests designed to assess human intelligence. These tests cover a variety of cognitive abilities, including reasoning, problem-solving, memory, and analytical skills.

Here are 5 key aspects of how IQ tests are designed and interpreted:

- Standardization: IQ tests are standardized to ensure consistent administration and scoring. This means that the test is administered and scored in the same way for everyone who takes it.

- Normalization: The scores on IQ tests are normalized so that the average IQ score is set at 100. This means that the majority of people will have IQ scores that fall within a certain range around 100, with fewer people scoring much higher or lower.

- Scoring: IQ scores are calculated from an individual's performance relative to others in the same age group. Two of the most widely used tests are the Wechsler Adult Intelligence Scale (WAIS) and the Wechsler Intelligence Scale for Children (WISC), both of which assign a score by comparing a person's performance with the average performance of their age group.

- Distribution: IQ scores are typically distributed in a bell curve, with the majority of scores falling near the middle (around 100) and fewer scores falling at the extremes (much higher or lower).

- Meaning of Scores: IQ scores are usually standardized with a mean of 100 and a standard deviation of 15, so scores between 85 and 115 fall within one standard deviation of the mean. About 68% of people score in this range, which is considered average compared to the general population.
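To make the normalization step concrete, here is a minimal sketch of how a raw test score could be rescaled to a mean of 100 and a standard deviation of 15. The function name `deviation_iq` and the age-group statistics (raw mean 40, raw SD 8) are hypothetical, and published tests rely on norm tables rather than a single linear formula like this one.

```python
def deviation_iq(raw_score: float, norm_mean: float, norm_sd: float) -> float:
    """Rescale a raw score to the IQ scale (mean 100, SD 15) via its z-score."""
    if norm_sd <= 0:
        raise ValueError("norm_sd must be positive")
    # z = how many standard deviations the raw score lies from the age-group mean
    z = (raw_score - norm_mean) / norm_sd
    return 100 + 15 * z


# Hypothetical age group: raw scores average 40 with a standard deviation of 8.
print(deviation_iq(40, norm_mean=40, norm_sd=8))  # 100.0
print(deviation_iq(48, norm_mean=40, norm_sd=8))  # 115.0
print(deviation_iq(32, norm_mean=40, norm_sd=8))  # 85.0
```

A raw score one standard deviation above the group mean maps to 115, and one standard deviation below maps to 85, which is the 85 to 115 range mentioned above.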

The Key Elements Of IQ You May Need To Know

Let’s take a close look at the IQ distribution curve and the average IQ.

The Distribution Of IQ Scores

The bell curve, also known as the normal distribution curve, is often used to illustrate IQ scores and their distribution in a population. In the context of IQ, the bell curve demonstrates how IQ scores are distributed, with the majority of scores falling near the average and fewer scores falling at the extremes (very high or very low).

The bell curve is symmetrical, with the highest point, or peak, representing the average IQ score, which is typically set at 100. As you move away from the peak towards the edges of the curve, the number of individuals with those IQ scores decreases. The curve slopes downward on either side of the peak, indicating that fewer individuals have very high or very low IQ scores.

For example, on a standard IQ test, the distribution of scores might look like this:

- Scores below 70: about 2.3% of the population

- Scores between 70 and 85: 13.6% of the population

- Scores between 85 and 100: 34.1% of the population

- Scores between 100 and 115: 34.1% of the population

- Scores between 115 and 130: 13.6% of the population

- Scores above 130: about 2.3% of the population

This distribution creates the characteristic bell shape when plotted on a graph, with the peak at 100 representing the average IQ score.

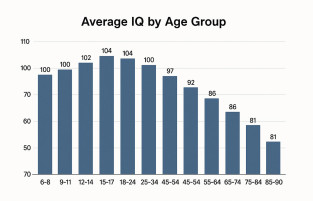
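The share of people in each band of the bell curve can be recomputed rather than memorized. The sketch below assumes an idealized normal distribution with mean 100 and standard deviation 15, and uses `statistics.NormalDist` from the Python standard library; the names `IQ_SCALE`, `BANDS`, and `share_between` are illustrative.

```python
from statistics import NormalDist

# Idealized model of the IQ scale: normal distribution, mean 100, SD 15.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def share_between(low=None, high=None):
    """Fraction of the modeled population scoring between low and high.

    Pass None for an open-ended band (no lower or no upper limit).
    """
    lower = IQ_SCALE.cdf(low) if low is not None else 0.0
    upper = IQ_SCALE.cdf(high) if high is not None else 1.0
    return upper - lower


BANDS = [
    ("below 70", None, 70),
    ("70 to 85", 70, 85),
    ("85 to 100", 85, 100),
    ("100 to 115", 100, 115),
    ("115 to 130", 115, 130),
    ("above 130", 130, None),
]

for label, low, high in BANDS:
    print(f"{label:>10}: {share_between(low, high):.1%}")
```

Rounded to one decimal, the bands come out at about 2.3%, 13.6%, 34.1%, 34.1%, 13.6%, and 2.3%. Real score distributions only approximate this ideal curve.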

What Is Average IQ?

By design, the average IQ score is 100: tests are normed so that scores follow a bell curve in which about 68% of individuals fall between 85 and 115. Scores between 85 and 115 are considered within the average range, while scores below 70 may indicate intellectual disability. Measured average scores can differ across countries, cultures, and environments.

Conversely, scores over 130 are classified as high IQ, a distinction achieved by approximately 2% of the global population. This distribution illustrates the varying levels of cognitive abilities among individuals, with the majority clustered around the average score, and fewer individuals at the extremes of the spectrum.
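To see where a single score sits relative to everyone else, you can convert it to a percentile rank. This is a rough sketch under the same idealized assumption (normal distribution, mean 100, SD 15); `percentile_rank` and `share_above` are hypothetical helper names.

```python
from statistics import NormalDist

# Idealized IQ scale: mean 100, standard deviation 15.
IQ_SCALE = NormalDist(mu=100, sigma=15)


def percentile_rank(iq: float) -> float:
    """Percentage of the modeled population scoring at or below the given IQ."""
    return IQ_SCALE.cdf(iq) * 100


def share_above(iq: float) -> float:
    """Fraction of the modeled population scoring above the given IQ."""
    return 1 - IQ_SCALE.cdf(iq)


for score in (70, 100, 115, 130):
    print(f"IQ {score}: percentile {percentile_rank(score):.1f}, "
          f"share above {share_above(score):.1%}")
```

Under this model a score of 130 sits at roughly the 98th percentile, which matches the "approximately 2%" figure above.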

2 Factors Influencing IQ

Intelligence Quotient (IQ) is influenced by a complex interplay of genetic and environmental factors. While genetic inheritance plays a significant role in determining IQ, environmental factors also play a crucial part in shaping cognitive development.

Genetic Factors: Genetic studies have shown that IQ is highly heritable, with estimates ranging from 50% to 80%. This indicates that a substantial portion of IQ variation among individuals can be attributed to genetic differences. Many genetic variants associated with intelligence have been identified, but each one has only a small effect, and intelligence is shaped by many variants acting together.

Environmental Factors:

- Early Life Environment: Environmental factors during prenatal development and early childhood can have a lasting impact on IQ. Factors such as maternal nutrition, exposure to toxins, and early stimulation can influence cognitive development.

- Socioeconomic Status (SES): SES is strongly correlated with IQ, with individuals from higher SES backgrounds tending to have higher average IQ scores. This association is partly explained by access to resources, educational opportunities, and environmental enrichment.

- Education: Formal education plays a crucial role in shaping IQ, with higher levels of education often associated with higher IQ scores. Education provides cognitive stimulation, opportunities for learning, and the development of problem-solving skills.

- Nutrition: Adequate nutrition is essential for brain development, particularly during early childhood and adolescence. Malnutrition can lead to cognitive impairments and lower IQ scores.

- Stress: Chronic stress can have detrimental effects on cognitive function, including memory, attention, and IQ. High levels of stress, particularly during critical periods of development, can impair cognitive development.

- Cultural and Familial Influences: Cultural and familial factors, such as parenting style, language exposure, and cultural values, can influence cognitive development and IQ.

The relationship between genetic and environmental factors is complex, with interactions between the two playing a significant role in shaping IQ.

For example, individuals with a genetic predisposition for high IQ may benefit more from environmental enrichment, such as educational opportunities, than those without such a predisposition.

The Importance Of IQ In Real Life And Research

IQ, or Intelligence Quotient, is used in various contexts to assess cognitive abilities and predict academic and professional success.

- Educational placement: IQ scores play a significant role in educational settings. They are used to identify students who may benefit from advanced or specialized programs, such as gifted or talented programs. IQ tests help educators understand students' cognitive strengths and weaknesses, allowing for more tailored educational experiences.

- Employment screening: In some industries, particularly those that require high levels of cognitive abilities, IQ tests are used as part of the hiring process. These tests help employers assess candidates' problem-solving skills, analytical thinking, and ability to learn new information quickly. While IQ tests are not the sole determinant of job performance, they can provide valuable insights into a candidate's potential in certain roles.

- Clinical and neuropsychological assessment: IQ tests are widely used in clinical settings to assess cognitive functioning. They help clinicians diagnose intellectual disabilities, developmental delays, and cognitive impairments. IQ scores provide valuable information for treatment planning and intervention strategies, especially for individuals with learning disabilities or neurological disorders.

- Research: IQ scores are used in research studies to investigate the relationship between intelligence and various factors. Researchers use IQ tests to examine the impact of genetics, environment, education, and other factors on cognitive abilities. These studies help advance our understanding of intelligence and its implications for human development and behavior.

- Predictive validity: IQ scores have been shown to have predictive validity in certain areas. For example, higher IQ scores in childhood are associated with better academic achievement, higher income in adulthood, and even better health outcomes. While IQ is not destiny and many other factors contribute to these outcomes, IQ scores can provide valuable insights into potential trajectories.

3 Misconceptions About IQ

First of all, a common misconception about IQ is that it is a fixed and unchangeable measure of intelligence. In reality, IQ scores can change over time, especially during childhood and adolescence, as individuals develop and learn. Factors such as education, experiences, and opportunities for intellectual stimulation can all influence IQ scores.

Second, many people assume that IQ is the sole determinant of intelligence. While IQ tests measure specific cognitive abilities like logical reasoning and problem-solving, they do not encompass the full spectrum of human intelligence. Other forms of intelligence, such as emotional intelligence, creativity, and practical intelligence, are not assessed by traditional IQ tests.

Third, some believe that IQ scores cannot be influenced by outside factors, such as cultural background and socioeconomic status. In fact, critics argue that IQ tests may be biased towards certain cultural or socioeconomic groups, potentially leading to inaccuracies in measuring intelligence across diverse populations.

Overall, while IQ can offer valuable insights into cognitive abilities, it is just one aspect of intelligence. A comprehensive understanding of an individual's abilities and potential requires consideration of other factors alongside IQ.

History and Development Of IQ Tests

IQ tests, though widely used, have a surprisingly short history. Their development can be traced back to the early 20th century, driven by a need to identify students who required additional assistance in school.

Early attempts at measuring intelligence

Even before formal IQ tests, there were attempts to categorize people based on their intelligence. Sir Francis Galton, a 19th-century scientist, tried measuring physical characteristics to assess intelligence.

The Binet-Simon scale: the first standardized test

The first true IQ test is credited to a French psychologist named Alfred Binet and his colleague Theodore Simon in 1905. The Binet-Simon scale wasn't meant to produce a single ‘intelligence score’ but rather aimed to identify students needing educational support. It assessed areas like memory, problem-solving, and attention, not directly taught subjects.

The rise of IQ and the Stanford-Binet scale

The concept of the Intelligence Quotient (IQ) emerged shortly after with German psychologist William Stern. He proposed expressing intelligence as the ratio of mental age to chronological age; Terman later multiplied this ratio by 100, producing the familiar scale on which 100 is average.

Lewis Terman, an American psychologist, significantly revised the Binet-Simon Scale in 1916, creating the Stanford-Binet Intelligence Scales. This adaptation, along with Terman's use of the IQ concept, helped solidify IQ testing in the United States.

IQ tests today

IQ tests have undergone many revisions since their inception. They have become more standardized and cover a wider range of cognitive abilities. However, criticisms regarding cultural bias and limitations in measuring intelligence persist.

While IQ tests provide a snapshot of cognitive abilities, they are just one piece of the puzzle. They don't capture the full spectrum of human intelligence, which encompasses creativity, social skills, and other important aspects.

What Is An IQ Test And How Does It Work?

An IQ test is a standardized assessment designed to measure a person's cognitive abilities and provide a score called an IQ score. It aims to measure how well someone can reason, solve problems, and use information.

Here's a breakdown of how IQ tests work:

- Type of Questions: These tests involve various question formats, often including:

    - Pattern recognition: Identifying patterns in sequences of numbers, letters, shapes, or images.

    - Logic puzzles: Applying reasoning to solve problems with missing information.

    - Verbal comprehension: Understanding written or spoken language.

    - Spatial reasoning: Visualizing and manipulating objects mentally.

- Scoring: IQ tests are standardized, meaning they have a set procedure for administration and scoring. Scores are assigned based on the number of correct answers compared to the performance of a large representative group. The average score is set at 100, with scores above or below indicating how far someone's performance deviates from the average.

- What it measures: While IQ tests attempt to measure intelligence, it's important to understand they focus on specific cognitive abilities. These might include:

    - Short-term and long-term memory

    - Problem-solving skills

    - Processing speed

    - Analytical thinking
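The scoring step, comparing a count of correct answers with a representative group, can be sketched as follows. This is an assumption-laden illustration, not how any particular test is scored: the function name `iq_from_norm_sample`, the tiny norm sample, and the mid-rank percentile formula are all choices made for the example, whereas real tests use large samples for each age group and published norm tables.

```python
from bisect import bisect_left, bisect_right
from statistics import NormalDist
from typing import Sequence


def iq_from_norm_sample(raw_score: int, norm_sample: Sequence[int]) -> float:
    """Map a raw score to the IQ scale via its percentile rank in a norm sample."""
    ordered = sorted(norm_sample)
    below = bisect_left(ordered, raw_score)          # scores strictly lower
    ties = bisect_right(ordered, raw_score) - below  # scores exactly equal
    # Mid-rank percentile; the +0.5 and +1 keep it strictly between 0 and 1,
    # which inv_cdf requires.
    percentile = (below + 0.5 * ties + 0.5) / (len(ordered) + 1)
    z = NormalDist().inv_cdf(percentile)  # standard-normal z for that percentile
    return 100 + 15 * z


# Hypothetical norm group: number of correct answers from nine test-takers.
norm_group = [12, 15, 17, 18, 20, 22, 23, 25, 28]

for correct in (12, 20, 28):
    print(correct, round(iq_from_norm_sample(correct, norm_group), 1))
```

The median raw score of the norm group maps to 100, and higher raw scores map to higher IQ values. With such a small sample the extremes are only rough estimates.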

Factors Affecting The Precision Of IQ Tests

Several factors can affect the precision of IQ tests:

- Validity and reliability: IQ tests are designed to be valid and reliable measures of intelligence. Validity refers to whether the test accurately measures what it claims to measure, while reliability refers to the consistency of scores over time and across different administrations.

- Test construction: The quality of the test items and the way they are designed can significantly impact precision. Well-constructed tests have items that reliably measure the intended cognitive abilities.

- Test-taker factors: Factors such as fatigue, motivation, anxiety, and health can affect a test-taker's performance and, consequently, the precision of the test results.

- Limitations: While IQ tests provide valuable information about cognitive abilities, they are not without limitations. Factors such as cultural bias, test anxiety, and educational background can influence performance on IQ tests. Additionally, IQ tests may not capture all aspects of intelligence, such as creativity or emotional intelligence.
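One common way to express precision in numbers is the standard error of measurement from classical test theory, SEM = SD × sqrt(1 − reliability). The sketch below applies it to the IQ scale; the reliability value of 0.96 is purely illustrative and not taken from any specific test, and the interval is a simplified one centered on the observed score.

```python
import math


def standard_error_of_measurement(sd: float, reliability: float) -> float:
    """Classical test theory: SEM = SD * sqrt(1 - reliability)."""
    if not 0 <= reliability <= 1:
        raise ValueError("reliability must be between 0 and 1")
    return sd * math.sqrt(1 - reliability)


def confidence_interval(observed_iq: float, reliability: float,
                        sd: float = 15.0, z: float = 1.96):
    """Approximate 95% interval (z = 1.96) around an observed score."""
    sem = standard_error_of_measurement(sd, reliability)
    return observed_iq - z * sem, observed_iq + z * sem


# Illustrative assumption: a test with reliability 0.96 on the SD-15 scale.
low, high = confidence_interval(110, reliability=0.96)
print(f"SEM: {standard_error_of_measurement(15, 0.96):.1f}")
print(f"95% interval: {low:.1f} to {high:.1f}")
```

With these assumed numbers the SEM is about 3 points, so an observed score of 110 corresponds to a range of roughly 104 to 116. Lower reliability widens the range.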

The Most Popular And Trustworthy IQ Tests

There are a few IQ tests that are widely recognized and considered reputable in the field of psychology. Here are some of the most popular and trustworthy IQ tests:

- Stanford-Binet Intelligence Scales: The Stanford-Binet Intelligence Scales are one of the oldest and most widely used intelligence tests. They are designed to measure cognitive abilities in children and adults, providing a comprehensive assessment of intelligence.

- Wechsler Adult Intelligence Scale (WAIS): The WAIS is a popular IQ test for adults, designed to measure intelligence across a range of abilities including verbal comprehension, perceptual reasoning, working memory, and processing speed.

- Wechsler Intelligence Scale for Children (WISC): Similar to the WAIS, the WISC is designed for children and provides a measure of their intellectual abilities across various domains.

- Raven's Progressive Matrices: Raven's Progressive Matrices is a non-verbal IQ test that is widely used in educational and research settings. It is designed to measure abstract reasoning and is often described as relatively culture-fair.

- Mensa IQ Test: The Mensa IQ test is used by Mensa International, a society for people with high IQs. While the Mensa test is not as comprehensive as some other IQ tests, it is recognized for its difficulty and is often used as a screening tool for Mensa membership.

When it comes to online IQ tests, popularity and trustworthiness are harder to assess than for traditional, standardized tests administered by professionals. Here are a few options:

- Mensa IQ Test: Mensa offers an online IQ test that is widely recognized. While it's not as comprehensive as the official Mensa admissions test, it can still provide a challenging and indicative measure of one's IQ.

- PsychTests: PsychTests offers a variety of paid IQ tests and assessments that are developed by psychologists and are used by professionals for research and clinical purposes. While these tests are not free, they are considered to be more reliable and scientifically validated.

- IQ Tests Free: A free online test offering instant, comprehensive results. It features 3000 questions covering logical, numerical, and spatial fields, ensuring each test-taker gets a unique set of questions. Upon completion, participants receive a detailed report highlighting their strongest facet of intelligence. Several other websites also provide free IQ tests, such as IQTest.com and 123test.com. Although these are not official IQ tests, they provide a fun and challenging way to assess your abilities across many cognitive areas.

Ready to measure your intellectual abilities?

Take our free GBI online IQ test to practice before tackling an official assessment, or simply to gain insight into your IQ score range. Even if you're just curious about your IQ score and where you rank on the bell curve, an online test can offer a starting point for exploration.

In conclusion, IQ, or Intelligence Quotient, is a numerical representation of an individual's cognitive abilities compared to others in the same age group. It is derived from standardized tests designed to measure intelligence across various fields, including reasoning, problem-solving, spatial reasoning, verbal abilities, and memory. While IQ is commonly associated with intelligence, it is important to understand that it is just one aspect of intelligence and does not encompass the full spectrum of human cognitive abilities.